
Epica introduces 'AIJE', its Artificial Intelligence Jury Experiment to explore the potential of AI in assessing and understanding creative ideas

January 9, 2024


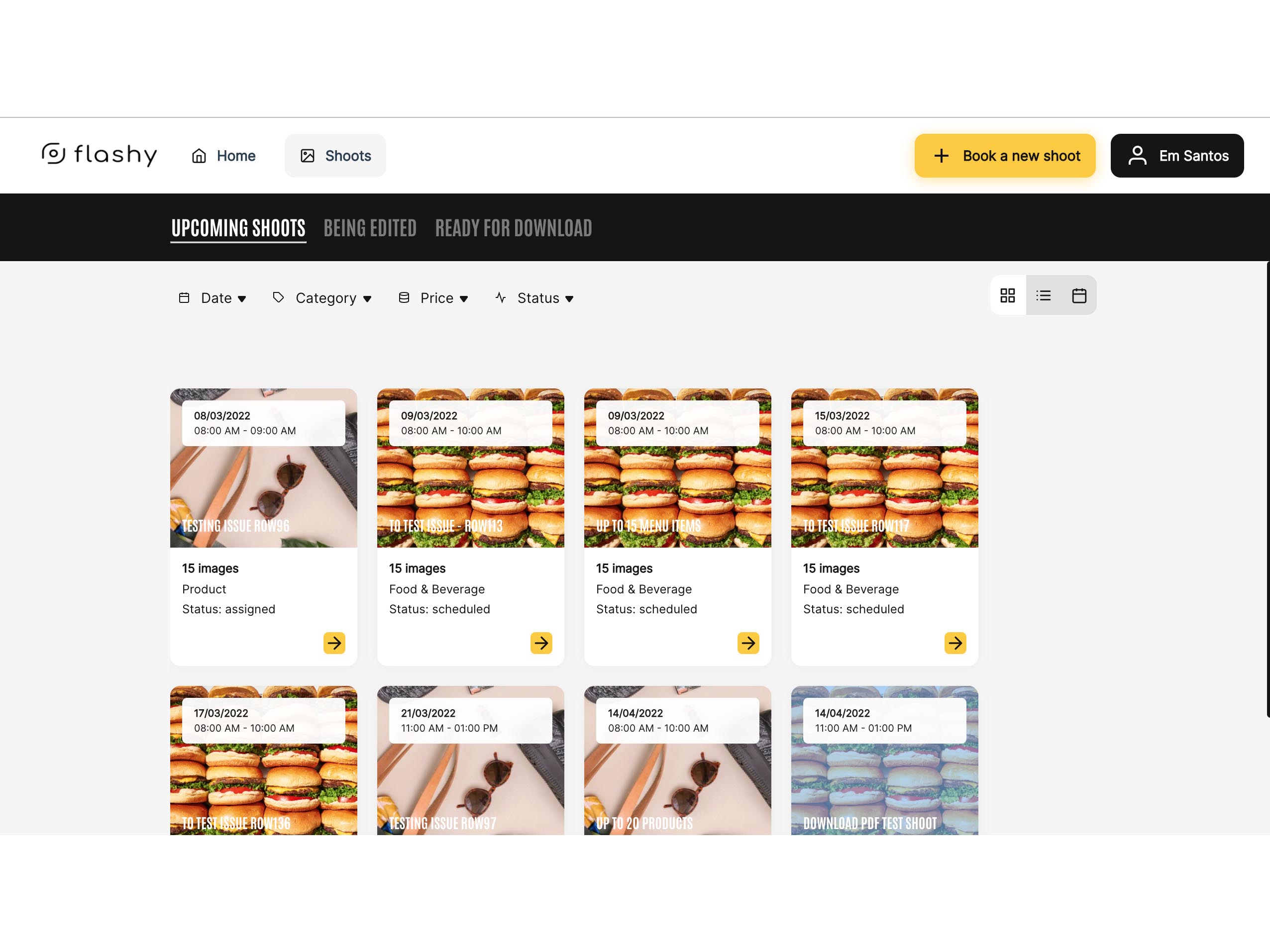

In a topical initiative, the Epica Awards, known for more than 30 years as the creative prize judged by journalists, created "AIJE", its Artificial Intelligence Jury Experiment. The project aimed to explore the potential of AI in assessing and understanding creative ideas.

Methodology

The experiment was run in parallel to the 2023 competition and its outcome was not incorporated into the main awards, which are judged by a panel of over 150 human journalists.

Mark Tungate, Epica Awards Editorial Director, commented: "Our press jury, keen on documenting topical events, recognized AI as a pressing issue. This led us to join the conversation with a light-hearted yet thought-provoking experiment."

Even so, the AI evaluation process was rigorous. For this first version, it relied solely on the text descriptions of campaigns provided by entrants. It was also confined to shortlisted entries in categories that lent themselves to textual explanation. A standardization tool was provided to entrants to help them distil creative concepts into succinct descriptions that could be easily processed by the AI.

Nicolas Huvé, Epica Awards Operation Director and AIJE's creator, commented: "Relying solely on text descriptions has its advantages, as it is somewhat more democratic. After all, a good idea should be able to be summed up as an 'elevator pitch'."

The descriptions for all entries were bundled by category and fed to the latest GPT-4 Turbo API, along with a prompt that included the category description as well as the Epica Awards' scoring scale, ranging from 1 (Damaging) to 10 (World Beating). This was designed to keep the AI's evaluations consistent with the criteria used by human jurors.
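To make the setup more concrete, here is a minimal Python sketch of how one category's descriptions might be bundled into a single prompt. The function names `build_prompt` and `parse_reply`, the entry IDs, and the JSON reply format are all hypothetical: the article does not publish Epica's actual prompt. Only the two endpoints of the scale (1, Damaging; 10, World Beating) are given, so no intermediate labels are filled in, and the API call itself is deliberately left out.

```python
import json

# Only the two endpoints of the Epica scale are stated in the article;
# intermediate labels are deliberately not invented here.
SCALE_MIN = (1, "Damaging")
SCALE_MAX = (10, "World Beating")


def build_prompt(category_name: str, category_description: str,
                 entries: dict[str, str]) -> str:
    """Bundle all entry descriptions of one category into a single prompt.

    `entries` maps a hypothetical entry ID to its standardized text description.
    """
    lines = [
        f"You are judging the '{category_name}' category.",
        f"Category description: {category_description}",
        f"Score each entry from {SCALE_MIN[0]} ({SCALE_MIN[1]}) "
        f"to {SCALE_MAX[0]} ({SCALE_MAX[1]}).",
        # Hypothetical output format, chosen so replies are machine-readable.
        "Return a JSON object mapping each entry ID to "
        '{"score": <int>, "justification": <string>}.',
        "",
        "Entries:",
    ]
    for entry_id, description in entries.items():
        lines.append(f"[{entry_id}] {description}")
    return "\n".join(lines)


def parse_reply(reply: str) -> dict[str, tuple[int, str]]:
    """Parse a model reply in the hypothetical JSON shape requested above."""
    data = json.loads(reply)
    return {k: (int(v["score"]), v["justification"]) for k, v in data.items()}
```

Bundling a whole category into one prompt lets the model see every competing entry at once, which mirrors how a human juror compares work within a category.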

The AI then generated a score and a text justification for each entry. This process was run not once but 80 times, and the resulting scores were averaged using the interquartile range (IQR), a method that eliminates outliers and captures the central tendency of the scores. The AI also synthesized the 80 text justifications into an overarching commentary on each campaign.
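The article does not specify exactly how the IQR was applied. One plausible reading is the interquartile mean: sort an entry's 80 scores, drop the lowest and highest quarters, and average the middle half. (Another common approach instead discards values lying more than 1.5 × IQR beyond the quartiles before averaging.) A minimal Python sketch of the interquartile-mean reading, using made-up scores:

```python
import statistics


def interquartile_mean(scores: list[float]) -> float:
    """Mean of the middle 50% of scores (lowest and highest quarters dropped).

    Assumes len(scores) is a multiple of 4, as with 80 runs; this keeps the
    sketch simple and avoids fractional weighting at the quartile boundaries.
    """
    n = len(scores)
    if n == 0 or n % 4 != 0:
        raise ValueError("expected a non-empty list whose length is a multiple of 4")
    ordered = sorted(scores)
    quarter = n // 4
    return statistics.mean(ordered[quarter:n - quarter])


# Hypothetical scores from 80 runs for one entry: mostly 7s and 8s,
# plus two stray 1s and two stray 10s.
runs = [1, 1] + [7] * 38 + [8] * 38 + [10, 10]
print(statistics.mean(runs))     # 7.4
print(interquartile_mean(runs))  # 7.5
```

With these hypothetical runs, the plain mean is 7.4, while the interquartile mean ignores the stray 1s and 10s and returns 7.5.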

Results

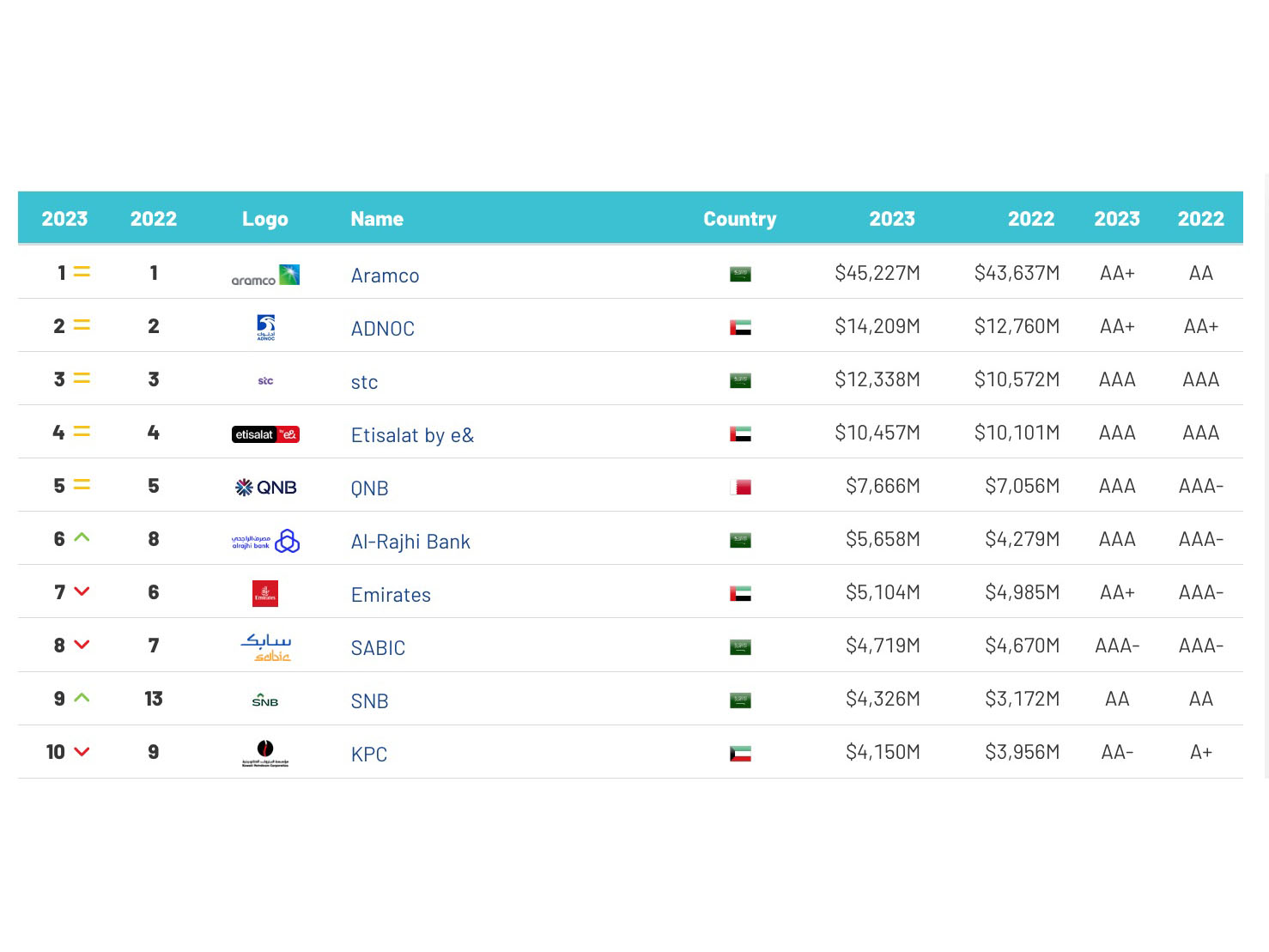

The AIJE experiment revealed a modest correlation with human voting patterns, as indicated by a correlation coefficient of about 0.25.
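The article does not say which correlation measure was used. Assuming the usual Pearson coefficient, computed over each entry's averaged AI score and its human score, a self-contained Python sketch looks like this (Python 3.10+ also provides `statistics.correlation`, which computes the same quantity):

```python
import math


def pearson(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation coefficient between two equal-length score lists."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two lists of equal length >= 2")
    mx = sum(xs) / len(xs)
    my = sum(ys) / len(ys)
    # Covariance and standard deviations, up to a common 1/n factor that cancels.
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    if sx == 0 or sy == 0:
        raise ValueError("correlation is undefined when one list is constant")
    return cov / (sx * sy)
```

The coefficient ranges from -1 to 1, so a value of about 0.25 indicates a modest positive linear relationship. Because the live experiment covered only shortlisted entries, the human scores would likely span a narrower range, and restricting the range of one variable tends to lower a correlation coefficient.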

Nicolas Huvé commented: "Our initial tests showed a promising correlation with human scores, especially in the lower tier. However, in the live experiment we focused only on the shortlist, which led to a notable discrepancy. That was not surprising, as all these entries had already been deemed high quality by a human jury."

AI scores were higher, averaging 7.45, in contrast to human scores, which averaged 6.60. This trend highlights a fundamental difference in the evaluation approach.

"Journalists, known for their critical analysis, are generally tougher in their scoring. In contrast, AIJE tended to be more easily impressed. In the jury room, journalists could identify ideas that had been done before in some way, whereas AIJE perceived novelty," notes Huvé.

This difference underscores journalists' deeper understanding of what makes an idea original. AIJE, however, was more impartial.

Huvé adds: "AIJE seemed more efficient at evaluating a campaign strictly within the scope of its category. Unlike human jurors, who may give higher or lower scores to work they personally prefer or dislike, AIJE was not influenced by such human biases."

To illustrate the human factor in evaluation, Huvé cites the example of 'The X-Tinction Timeline' by McCann Worldgroup Germany, a clever post juxtaposing the rebranding of Twitter's bird logo as "X" with animal extinction.

The AI commented: "A powerful and market-leading campaign that smartly rides the wave of a current event to address a pressing global issue. The creative parallel drawn between Twitter's rebranding and wildlife extinction effectively combines pop culture with environmental activism."

A human juror was more nuanced on the voting platform: "Very clever way to harness and redirect outrage. If no PR is bad PR, then it benefitted X as well, unfortunately. Hopefully, it converted into donations for WWF and not just attention to Musk's hubris."

The work went on to win Silver in the Topical & Real Time category at the Epica Awards.

The experiment provides valuable insights into AI's potential role in assessing creativity. Subsequent versions of AIJE will include more categories as well as visuals. "We can now have it not only look at images but also watch and interpret entire case study videos, which opens promising avenues for its future," Huvé commented. "While we do not exclude training a model exclusively on awards results, we would rather let AIJE rely on a general AI, which is where I think the field is moving, and which is also more in line with the outsider spirit of the Epica Awards, keeping away from the 'feedback loop' of the creative industry."

For the 2024 Epica Awards, entrants will be automatically eligible to participate in the next iteration of AIJE.
